Usual disclaimer here: these are my notes to help me understand the problem and get a workable model of what’s going on into my head.

I’ve been leading the development of an application built with the ArcGIS Runtime SDK for WPF version 10.1.1.0, designed to run on tablet devices running Windows 7 or 8. It’s essentially a desktop application optimised for touch screen use. The application uses the MVVM pattern, supported by Caliburn.Micro.

The application is task driven, meaning it supports field workers in completing the tasks allocated to them. To present the user with lists of tasks to be completed, the application has to query the local geodatabase via the ESRI APIs during a start-up initialisation phase. To our dismay, this phase was taking a very long time: up to several minutes on some tablet devices.

A thorough investigation into potential bottlenecks provided insight into the use of the LocalServer, the local service classes (e.g. LocalFeatureService, LocalMapService, LocalGeometryService, etc.) and the ‘plain’ service classes (e.g. FeatureService, GeometryService).

What follows are some of the lessons learned.

Tip 1 – Understanding the LocalServer, local service classes and the plain service classes

The LocalServer

The LocalServer is not too big a deal here. It’s just like having a web server running locally, one that can host REST services just like a conventional ArcGIS Server. The first task is to get the server running, and this can happen automatically:

“It is not necessary to work with the LocalServer directly. Instead, creating and starting the individual LocalService classes (LocalMapService, LocalFeatureService, LocalGeocodeService, LocalGeometryService, and LocalGeoprocessingService) will start the LocalServer if it is not already running.” [1]

I prefer to take control of this and start the server myself using LocalServer.InitializeAsync [1]. In this application I used a Caliburn.Micro IResult along these lines:

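```csharp
using System;
using Caliburn.Micro;
using ESRI.ArcGIS.Client.Local;

public class InitialiseLocalServerResult : IResult
{
    public event EventHandler<ResultCompletionEventArgs> Completed;

    public void Execute(ActionExecutionContext context)
    {
        // Start the LocalServer; ServerInitialised is called once initialisation finishes.
        LocalServer.InitializeAsync(ServerInitialised);
    }

    private void ServerInitialised()
    {
        OnCompleted();
    }

    private void OnCompleted()
    {
        // Tell Caliburn.Micro this result is done so the coroutine can continue.
        var handler = Completed;
        if (handler != null)
        {
            handler(this, new ResultCompletionEventArgs());
        }
    }
}
```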

So the LocalServer is just a local web server used to host REST services for you.

Local service classes

You create services on your LocalServer using the local service classes, which can be found in the ESRI.ArcGIS.Client.Local namespace. There are a number of local service classes available, such as:

- LocalMapService - provides access to maps, features, and attribute data contained within a Map Package.

- LocalFeatureService - forms the basis for feature editing. The feature service is just a map service with the Feature Access capability enabled.

- LocalGeometryService - a special type of service that is not based on a specific geographic resource such as a Map Package; instead it provides access to geometric operations.

- LocalGeocodeService - provides address and postcode searching, etc.

- LocalGeoprocessingService - a local geoprocessing service hosted by the runtime local server.

Again, I like to take control of when and how the local services are created on the LocalServer, and in this application I used an IResult something like this:

```csharp
using System;
using Caliburn.Micro;
using ESRI.ArcGIS.Client.Local;

public class StartLocalFeatureServiceResult : IResult<LocalFeatureService>
{
    private readonly string _mapPackagePath;

    public StartLocalFeatureServiceResult(string mapPackagePath)
    {
        _mapPackagePath = mapPackagePath;
    }

    public event EventHandler<ResultCompletionEventArgs> Completed;

    // The started service; set only when the service started without error.
    public LocalFeatureService Result { get; private set; }

    public bool HasError
    {
        get { return Error != null; }
    }

    public Exception Error { get; set; }

    public void Execute(ActionExecutionContext context)
    {
        // Creating the service and calling StartAsync starts it on the LocalServer.
        var service = new LocalFeatureService(_mapPackagePath);
        service.StartAsync(ServiceStarted);
    }

    private void ServiceStarted(LocalService service)
    {
        if (service.Error == null)
        {
            Result = (LocalFeatureService)service;
        }
        else
        {
            Error = service.Error;
        }

        OnCompleted();
    }

    private void OnCompleted()
    {
        // Errors are reported through HasError/Error rather than the event args,
        // so the coroutine carries on and the caller decides what to do.
        var handler = Completed;
        if (handler != null)
        {
            handler(this, new ResultCompletionEventArgs());
        }
    }
}
```

If you are not using Caliburn.Micro, the key point is that instantiating a LocalFeatureService and calling StartAsync on it starts a service on the LocalServer. The use of map packages is outside the scope of this post, but essentially a package contains the data to be exposed by the service.
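Without Caliburn.Micro, one option is to wrap the callback-style StartAsync (or LocalServer.InitializeAsync) in a Task. The sketch below is my own helper, not part of the SDK, and it is written generically so it runs without the SDK; the CallbackTask name and its getError parameter are hypothetical, and it assumes .NET 4.0 or later for TaskCompletionSource.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper, not part of the ArcGIS SDK: turns a callback-style
// "start" method into a Task that completes when the callback fires.
public static class CallbackTask
{
    // start:    calls the callback-style API, handing it our completion callback.
    // getError: returns the error carried by the callback argument, or null on success.
    public static Task<T> FromCallback<T>(Action<Action<T>> start, Func<T, Exception> getError)
    {
        if (start == null) throw new ArgumentNullException("start");
        if (getError == null) throw new ArgumentNullException("getError");

        var tcs = new TaskCompletionSource<T>();
        try
        {
            start(result =>
            {
                Exception error = getError(result);
                if (error != null)
                    tcs.TrySetException(error);   // e.g. the service failed to start
                else
                    tcs.TrySetResult(result);     // e.g. the started service
            });
        }
        catch (Exception ex)
        {
            // The start call (or a callback it ran synchronously) threw.
            tcs.TrySetException(ex);
        }
        return tcs.Task;
    }
}
```

With the SDK, and assuming the Action&lt;LocalService&gt; callback used in StartLocalFeatureServiceResult, a call might look like `CallbackTask.FromCallback<LocalService>(cb => new LocalFeatureService(path).StartAsync(cb), s => s.Error)`, where `path` is your map package path. The resulting task can be awaited (C# 5) or chained with ContinueWith.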

The most useful properties on the local service classes are the URLs (e.g. UrlMapService and UrlFeatureService). You can use these with the layer classes (e.g. FeatureLayer) or with classes such as QueryTask.
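One thing to watch: as far as I know, FeatureLayer and QueryTask expect the URL of an individual layer (the service URL followed by the layer index, e.g. .../FeatureServer/0), whereas the IdentifyTask in Tip 4 takes the map service URL itself. A small helper keeps that string handling in one place; the ServiceUrls name is hypothetical, not an SDK API.

```csharp
using System;

// Hypothetical helper, not an SDK API: turns a service URL (for example
// UrlFeatureService or UrlMapService) into the URL of one layer in it.
public static class ServiceUrls
{
    public static string LayerUrl(string serviceUrl, int layerId)
    {
        if (string.IsNullOrEmpty(serviceUrl))
            throw new ArgumentException("A service URL is required.", "serviceUrl");
        if (layerId < 0)
            throw new ArgumentOutOfRangeException("layerId", "Layer ids are zero or greater.");

        // REST layer endpoints are the service URL followed by "/<layer id>".
        return serviceUrl.TrimEnd('/') + "/" + layerId;
    }
}
```

For example, `featureLayer.Url = ServiceUrls.LayerUrl(featureService.UrlFeatureService, 0);`, where featureLayer and featureService are your own instances.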

So the local service classes encapsulate a REST service to be instantiated on your LocalServer.

Plain service classes

I think the biggest confusion arises over the difference between the local service classes (e.g. LocalFeatureService) and the plain service classes (e.g. FeatureService).

In fact the difference is quite straightforward. Whereas the local service classes are used to create REST services on your LocalServer, the plain service classes are clients used to connect to and query those services (they work equally well against a remote ArcGIS Server). It’s as simple as that. The plain service classes are found under the ESRI.ArcGIS.Client namespace and its sub-namespaces (QueryTask and GeometryService, for example, live in ESRI.ArcGIS.Client.Tasks).
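To make the "client" point concrete: because the LocalServer exposes ordinary REST endpoints, a class such as QueryTask is essentially issuing HTTP requests against them. The sketch below builds roughly the kind of query request involved, assuming the standard ArcGIS REST query parameters (where, outFields, returnGeometry, f). The RestQuery helper is purely illustrative; in practice you would let QueryTask do this for you.

```csharp
using System;
using System.Text;

// Illustrative only, not an SDK API: builds the kind of ArcGIS REST "query"
// request a client such as QueryTask sends to a layer endpoint.
public static class RestQuery
{
    public static string Build(string layerUrl, string where, string outFields, bool returnGeometry)
    {
        if (string.IsNullOrEmpty(layerUrl))
            throw new ArgumentException("A layer URL is required.", "layerUrl");

        var sb = new StringBuilder(layerUrl.TrimEnd('/'));
        sb.Append("/query");
        // Parameter values are percent-encoded so SQL such as "1=1" survives the trip.
        sb.Append("?where=").Append(Uri.EscapeDataString(where));
        sb.Append("&outFields=").Append(Uri.EscapeDataString(outFields));
        sb.Append("&returnGeometry=").Append(returnGeometry ? "true" : "false");
        // f=json asks the REST endpoint for a JSON response.
        sb.Append("&f=json");
        return sb.ToString();
    }
}
```

Pasting a URL built this way into a browser is also a quick way to check what a local layer returns, independently of your application code.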

Tip 2 – Log the local server URLs when you start the LocalServer

I always write the URL of the LocalServer to a log file somewhere. If you paste that URL into your browser you will see a services directory page that shows you exactly which services are running. This is very useful if you want to make sure you are not starting more services than you need. Simply log LocalServer.Url and LocalServer.AdminUrl.

You’ll get URLs like this: http://127.0.0.1:50000/glMxtq/arcgis/rest/services. Note there’s a randomly generated string in there (‘glMxtq’ in this case). That’s different every time you start the server so you need to grab the new URL each time.

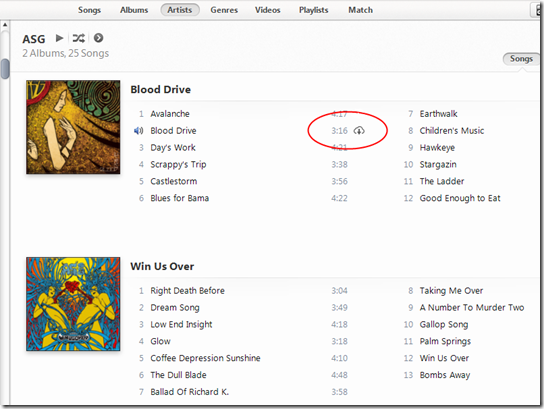

Figure 1 – A services directory page for a running LocalServer

Watch out for duplicate services. Maybe you don’t need them and your code can be changed to only start those services you actually need.
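Here is a sketch of the kind of log line I mean. LocalServerUrlLog is a hypothetical helper; it assumes LocalServer.Url and LocalServer.AdminUrl are plain strings, that the random string is the first path segment (as in the example URL above), and that the services directory honours the usual ArcGIS REST f=pjson parameter, which returns a JSON listing of the running services.

```csharp
using System;

// Hypothetical logging helper: formats the LocalServer URLs for a log file and
// pulls out the randomly generated path segment (e.g. "glMxtq").
public static class LocalServerUrlLog
{
    // Returns the first path segment of the server URL, which in the example
    // URL above is the random string that changes on every start.
    public static string RandomSegment(string serverUrl)
    {
        string[] segments = new Uri(serverUrl).AbsolutePath
            .Split(new[] { '/' }, StringSplitOptions.RemoveEmptyEntries);
        return segments.Length > 0 ? segments[0] : string.Empty;
    }

    // One line containing both URLs plus a JSON view of the services directory
    // (assumes f=pjson is supported, as on a conventional ArcGIS Server).
    public static string Describe(string serverUrl, string adminUrl)
    {
        return string.Format(
            "LocalServer started (random segment '{0}'): services={1} admin={2} json={3}?f=pjson",
            RandomSegment(serverUrl), serverUrl, adminUrl, serverUrl.TrimEnd('/'));
    }
}
```

Usage would be something like `logger.Info(LocalServerUrlLog.Describe(LocalServer.Url, LocalServer.AdminUrl));`, where logger is whatever logging framework you already use.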

Tip 3 – If you’ve already got a LocalFeatureService maybe you don’t need a LocalMapService too

In my application I needed a LocalMapService for some activities and a LocalFeatureService for others, so I naively created an instance of each pointing at the same map package. However, when I checked the server using the services directory (see Tip 2 above), I found that in addition to the feature service there were two map services running, not one.

It seems that creating a local feature service also creates a local map service for you. In fact, the LocalFeatureService class inherits from LocalMapService, so an instance of LocalFeatureService gives you two URLs: UrlFeatureService from LocalFeatureService and UrlMapService inherited from LocalMapService.

If you need to access a map package via a LocalFeatureService and a LocalMapService just create a LocalFeatureService and you’ll get both.

Tip 4 – Beware of using code like this

```csharp
LocalMapService localMapService = new LocalMapService(@"Path to ArcGIS map package");
localMapService.StartAsync(delegateService =>
{
    IdentifyTask identifyTask = new IdentifyTask();
    identifyTask.Url = localMapService.UrlMapService;
});
```

This example comes from the ArcGIS Runtime SDK for WPF documentation. It’s not wrong, but it could lead you down the wrong path. It would be all too easy to add code like this to a class and call it many times. Remember, each time you create a service and call StartAsync, a new instance of the service is started on the server, which you probably neither want nor need.

I prefer to create my local services in separate operations and keep references to them that I can use later (see StartLocalFeatureServiceResult in Tip 1 above). That way only the minimum number of services runs on the LocalServer. You will see from the example that only the UrlMapService property really matters. As a rule I create one service for each package I need to access, keep a reference to that service somewhere and read its URL property as and when I need it.
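A minimal sketch of that "one service per package" rule, written as a generic registry so it runs without the SDK. LocalServiceRegistry, GetService and the start/succeeded delegates are hypothetical names of my own; the start delegate stands in for creating a local service and calling StartAsync. Callers that ask for a package while its service is still starting are queued rather than triggering a second start, and a service that fails to start is not cached, so a later request can retry.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not an SDK API: starts at most one service per map
// package path and hands the same instance to every caller.
public sealed class LocalServiceRegistry<TService>
{
    private readonly Action<string, Action<TService>> _start;   // starts a service, then calls back
    private readonly Func<TService, bool> _succeeded;           // did the start succeed?
    private readonly object _gate = new object();
    // Windows paths are case-insensitive, so compare package paths that way.
    private readonly Dictionary<string, TService> _running =
        new Dictionary<string, TService>(StringComparer.OrdinalIgnoreCase);
    private readonly Dictionary<string, List<Action<TService>>> _waiting =
        new Dictionary<string, List<Action<TService>>>(StringComparer.OrdinalIgnoreCase);

    public LocalServiceRegistry(Action<string, Action<TService>> start, Func<TService, bool> succeeded)
    {
        if (start == null) throw new ArgumentNullException("start");
        if (succeeded == null) throw new ArgumentNullException("succeeded");
        _start = start;
        _succeeded = succeeded;
    }

    public void GetService(string packagePath, Action<TService> callback)
    {
        TService running;
        bool isRunning;
        bool mustStart = false;

        lock (_gate)
        {
            isRunning = _running.TryGetValue(packagePath, out running);
            if (!isRunning)
            {
                List<Action<TService>> waiters;
                if (_waiting.TryGetValue(packagePath, out waiters))
                {
                    // A start is already in progress: queue instead of starting a duplicate.
                    waiters.Add(callback);
                }
                else
                {
                    _waiting[packagePath] = new List<Action<TService>> { callback };
                    mustStart = true;
                }
            }
        }

        if (isRunning)
        {
            callback(running);   // Reuse the service that is already running.
        }
        else if (mustStart)
        {
            _start(packagePath, service => OnStarted(packagePath, service));
        }
    }

    private void OnStarted(string packagePath, TService service)
    {
        List<Action<TService>> waiters;
        lock (_gate)
        {
            waiters = _waiting[packagePath];
            _waiting.Remove(packagePath);
            // Only cache services that started cleanly, so a failure can be retried.
            if (_succeeded(service))
            {
                _running[packagePath] = service;
            }
        }

        foreach (Action<TService> waiter in waiters)
        {
            waiter(service);
        }
    }
}
```

With the SDK the registry might be created as `new LocalServiceRegistry<LocalFeatureService>((path, done) => new LocalFeatureService(path).StartAsync(s => done((LocalFeatureService)s)), s => s.Error == null)`, again assuming the Action&lt;LocalService&gt; callback signature used in Tip 1. Because a LocalFeatureService also exposes UrlMapService (Tip 3), one registry of feature services can serve both kinds of URL.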

References

[1] – ArcGIS Runtime SDK for WPF API reference