Setup and scripting

Introduction

When you start to move into a service-oriented way of working, particularly with microservices, the proliferation of services becomes an issue in itself. We know that we should avoid the ‘nano services’ anti-pattern and take care to identify our service boundaries pragmatically, perhaps using the DDD notion of bounded contexts to help discover those boundaries, but sooner or later you are going to have multiple services and perhaps multiple instances of services too.

Pretty quickly you’ll run into a number of related problems. For example, how do client applications discover and locate services? If there are multiple instances of a service, which one should the client use? What happens if an instance is down? How do you manage configuration across services? The list goes on.

A possible solution to these problems is Consul by HashiCorp. Consul is open source, distributed under the MPL 2.0 license, and offers DNS and HTTP interfaces for service registration and discovery, health checking of services so you can implement circuit breakers in your code, and a key-value store for service configuration. It is also designed to work across data centres.

This post isn’t going to look at the details of Consul and the functionality it offers. Rather, it focuses on setting up a Consul cluster you can use for development or testing. Why a cluster? Well, the Consul documentation suggests that Consul be set up this way:

“While Consul can function with one server, 3 to 5 is recommended to avoid failure scenarios leading to data loss. A cluster of Consul servers is recommended for each datacenter.” [1]

A Windows distribution of Consul is available, but it’s not recommended for production environments, where Linux is the preference. With consistency in mind, I elected to set up a cluster of Linux virtual machines running Consul even when developing on a Windows machine.

When it comes to provisioning virtual machines there are a number of tools to choose from, including Vagrant (also by HashiCorp), Chef and Puppet. Consul is actually pretty simple to install and configure, with comparatively few steps required to get a cluster up and running. Because of this, and because I wanted to look at Vagrant in a bit more detail, I opted to use Vagrant on its own to provision the server cluster. The addition of Chef at this point seemed like overkill.

The advantage of using something like Vagrant is that provisioning the cluster is captured in text form (infrastructure as code), so you can reprovision your environment at any time in the future. This is great because it means I can wipe the cluster off my system at any time and know with confidence that I can easily provision it again later. Because the definition of the cluster is captured this way, I know it will be reconstructed in exactly the same way each time it’s provisioned.

A lot of what follows is based on a blog post by Justin Ellingwood - How to Configure Consul in a Production Environment on Ubuntu 14.04. I’ve made some changes to Justin’s approach but it’s basically the same. The key difference is that I’ve used Vagrant to provision the cluster.

Preparation

Before getting started I elected to use a couple of tools to help me out. The first is ConEmu which I use habitually anyway. ConEmu is an alternative to the standard Windows command prompt and adds a number of useful features including the ability to open multiple tabs. If like me you use Chocolatey, installing ConEmu is a breeze.

c:\> choco install conemu

The next tool I chose to use is CygWin. CygWin provides a Unix-like environment and command-line interface for Windows. Why use this? Well, it’s completely optional and everything that follows will run from the Windows command line, but I found it helpful to work in a Unix-like way from the outset. To create the Consul cluster I knew I’d be writing Bash scripts, so using CygWin meant I wouldn’t be context switching between Windows and Linux shell commands.

To install CygWin I used cyg-get which itself can be installed using Chocolatey.

c:\> choco install cyg-get

Once you have cyg-get you can use it to install CygWin:

c:\> cyg-get default

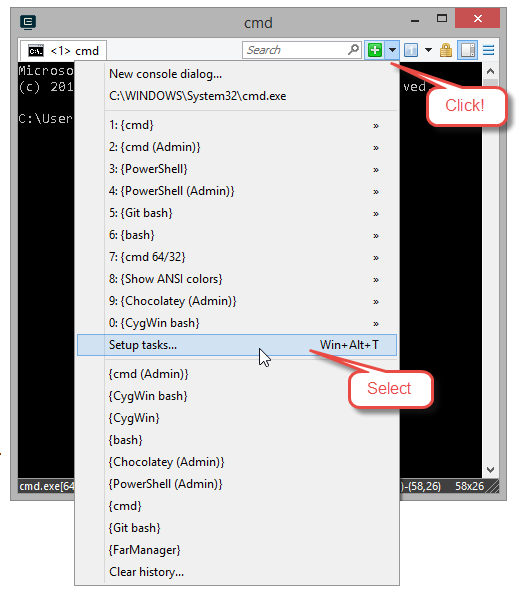

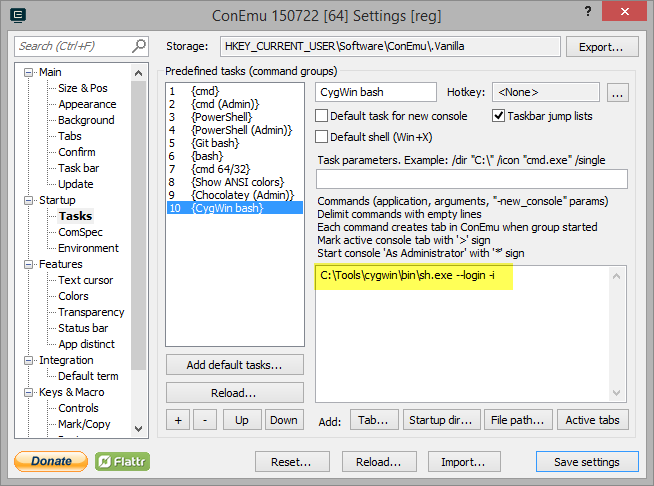

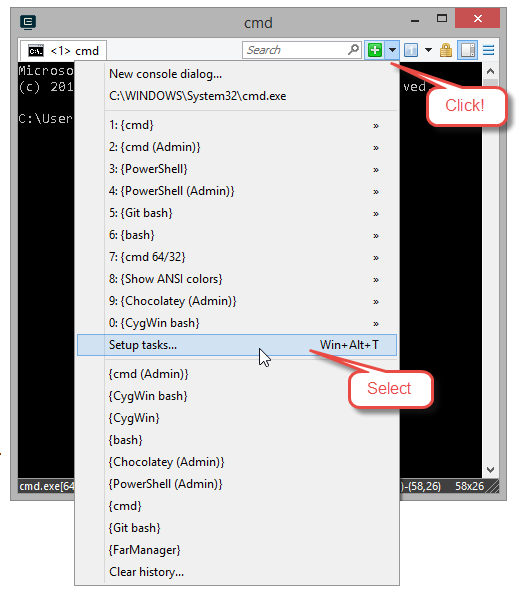

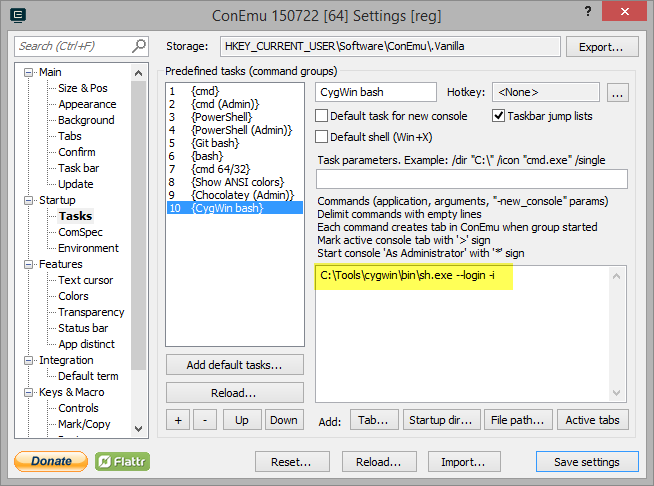

Once you have ConEmu and CygWin in place it is useful to configure ConEmu so you can open CygWin Bash from the ConEmu ‘new console’ menu. To do that you need to add a new console option.

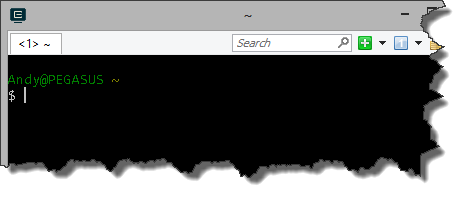

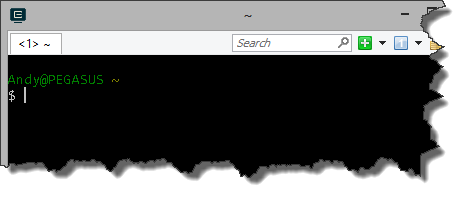

Now you can open a new CygWin bash console in ConEmu which results in a Unix-like prompt:

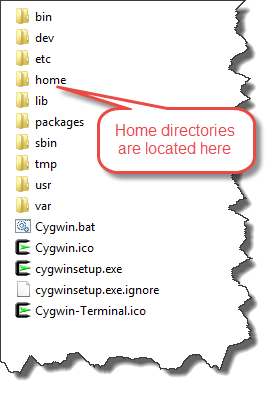

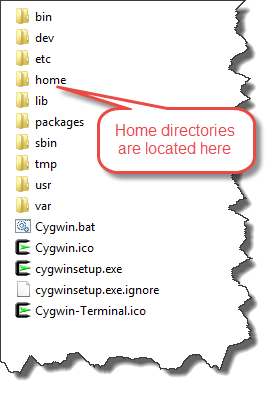

You’ll note that this prompt is located at your home directory (identified by the ~). On my system CygWin is installed to c:\tools\cygwin. In the CygWin folder there’s a ‘home’ subfolder which itself contains a further subfolder named after your login name. That’s your CygWin home folder (~) and that’s where I added the Vagrant files and scripts required to build out the Consul cluster later on.

The tools above are really just ‘nice to haves’. The main components you’ll need are VirtualBox and Vagrant. Both can be installed by the trusty Chocolatey.

c:\> choco install virtualbox

c:\> choco install vagrant

What do we need in our Consul cluster?

The first thing to note is that Consul (strictly speaking, the Consul agent) can run in 2 basic modes: server or client. Without going into details (the Consul documentation covers them), a cluster should contain 3 to 5 Consul agents running in server mode, and it’s recommended that the servers run on dedicated instances.

So that’s the first requirement: at least 3 Consul agents running in server mode each in a dedicated instance.

Consul also includes a web-based user interface, the Consul Web UI. This is actually hosted by a Consul agent by passing in the appropriate configuration options at start-up time.

So the second requirement is to have a Consul agent running in client mode which also hosts the Consul Web UI. We’ll create this in a separate instance.

That’s 4 virtual machines, each hosting a Consul agent. Three instances will run the agent in server mode; the fourth will run it in client mode and host the Consul Web UI.

Creating the working folder

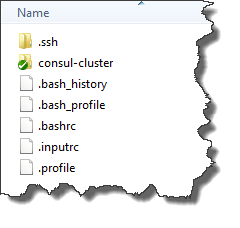

If you’re not using CygWin you just need to create a folder to work in. If you’re using CygWin the first job is to create a working folder in the CygWin home. I called mine ‘consul-cluster’. You’ll see from the screenshot above that I also created a Git repository in this folder, hence the green tick courtesy of TortoiseGit. I added all of my Vagrant files and scripts to Git (infrastructure as code!).

The Vagrantfile

The main artefact when using Vagrant is the Vagrantfile.

“The primary function of the Vagrantfile is to describe the type of machine required for a project, and how to configure and provision these machines.” [2]

I created the Vagrantfile in the consul-cluster folder I created previously. Let’s cut to the chase. Here’s my completed Vagrantfile.

# -*- mode: ruby -*-

# vi: set ft=ruby :

#^syntax detection

VAGRANTFILE_API_VERSION = "2"

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|

config.vm.box = "hashicorp/precise64"

config.vm.define "consul1" do |consul1|

consul1.vm.provision "shell" do |s|

s.path = "provision.sh"

s.args = ["/vagrant/consul1/config.json"]

end

consul1.vm.hostname = "consul1"

consul1.vm.network "private_network", ip: "172.20.20.10"

end

config.vm.define "consul2" do |consul2|

consul2.vm.provision "shell" do |s|

s.path = "provision.sh"

s.args = ["/vagrant/consul2/config.json"]

end

consul2.vm.hostname = "consul2"

consul2.vm.network "private_network", ip: "172.20.20.20"

end

config.vm.define "consul3" do |consul3|

consul3.vm.provision "shell" do |s|

s.path = "provision.sh"

s.args = ["/vagrant/consul3/config.json"]

end

consul3.vm.hostname = "consul3"

consul3.vm.network "private_network", ip: "172.20.20.30"

end

config.vm.define "consulclient" do |client|

client.vm.provision "shell" do |s|

s.path = "provisionclient.sh"

s.args = ["/vagrant/consulclient/config.json"]

end

client.vm.hostname = "consulclient"

client.vm.network "private_network", ip: "172.20.20.40"

end

end

A Vagrantfile can be used to provision a single machine but I wanted to use the same file to provision the 4 machines needed for the cluster. You’ll notice there are 4 config.vm.define statements in the Vagrantfile with each one defining one of the 4 machines we need. The first 3 (named consul1, consul2 and consul3) are our Consul servers. The last (named consulclient) is the Consul client that will host the web UI.

We give each machine a host name (for example, client.vm.hostname) and an IP address (client.vm.network). The IP addresses are important because we will use them later when we configure the Consul agents to join the cluster.
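To keep the names, addresses and roles straight, it can help to hold them in one small table. The sketch below is only an illustration in shell: the helper names cluster_nodes and count_role are hypothetical and are not part of the scripts in this post. The machine names and IP addresses are the ones from the Vagrantfile.

```shell
#!/bin/sh
# Hypothetical helper: prints one "name ip role" line per planned machine.
cluster_nodes() {
  cat <<'EOF'
consul1 172.20.20.10 server
consul2 172.20.20.20 server
consul3 172.20.20.30 server
consulclient 172.20.20.40 client
EOF
}

# count_role ROLE: prints how many machines have the given role.
count_role() {
  cluster_nodes | awk -v role="$1" '$3 == role { n++ } END { print n + 0 }'
}

echo "servers: $(count_role server), clients: $(count_role client)"
```

Counting the roles is a cheap sanity check that the layout still matches the requirement of three servers plus one client.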

Vagrant boxes

Prior to the config.vm.define statements there’s a line that defines the config.vm.box. Because this is outside the config.vm.define statements it’s effectively global and will be inherited by each of the defined machines that follow.

The config.vm.box statement specifies the type of box we are going to use. A box is a packaged Vagrant environment and in this case it’s the hashicorp/precise64 box - a packaged standard Ubuntu 12.04 LTS 64-bit box.

Vagrant provisioning

For each of the machines we’ve defined there is a ‘provisioner’ (a vm.provision block). In Vagrant, provisioning is the process by which you install software and alter configuration as part of creating the machine. There are a number of provisioners available, including some that leverage Chef, Docker and Puppet, to name but a few. However, in this case all I needed to do was run Bash scripts on the server. That’s where the Shell Provisioner comes in.

The 3 server instances (consul1, consul2, and consul3) all run a Bash script called provision.sh. Let’s take a look at it.

#!/bin/bash

# Step 1 - Get the necessary utilities and install them.

apt-get update

apt-get install -y unzip

# Step 2 - Copy the upstart script to the /etc/init folder.

cp /vagrant/consul.conf /etc/init/consul.conf

# Step 3 - Get the Consul Zip file and extract it.

cd /usr/local/bin

wget https://dl.bintray.com/mitchellh/consul/0.5.2_linux_amd64.zip

unzip *.zip

rm *.zip

# Step 4 - Make the Consul directory.

mkdir -p /etc/consul.d

mkdir -p /var/consul

# Step 5 - Copy the server configuration.

cp "$1" /etc/consul.d/config.json

# Step 6 - Start Consul

exec consul agent -config-file=/etc/consul.d/config.json

The steps are fairly straightforward. We’ll take a look at step 2 in more detail shortly, but basically the process is as follows:

- Install the unzip utility so we can extract Zip archives.

- Copy an upstart script to /etc/init so the Consul agent will be restarted if we restart the virtual machine.

- Grab the Consul Zip file containing the 64-bit Linux distribution. Unzip it to /usr/local/bin.

- Make directories for containing the Consul configuration and its data directory.

- Copy the Consul configuration file to /etc/consul.d/ (more on that shortly too).

- Finally, start the Consul agent using the configuration file.

Hang on. How can we be copying files? Well when you provision a machine using Vagrant the working folder will be mounted inside the virtual machine (at /vagrant). That means that all the files you create in the working folder are available inside the virtual machine.

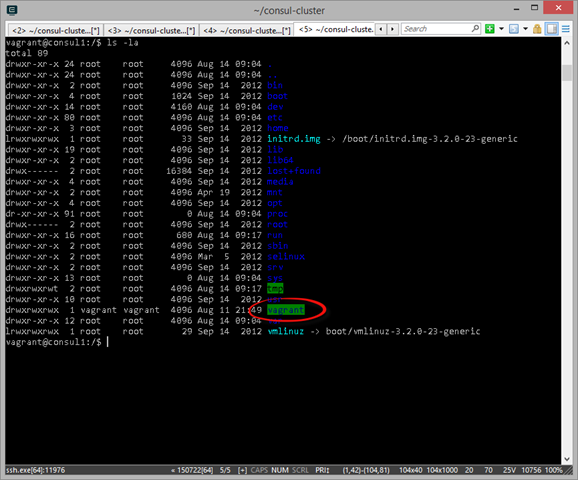

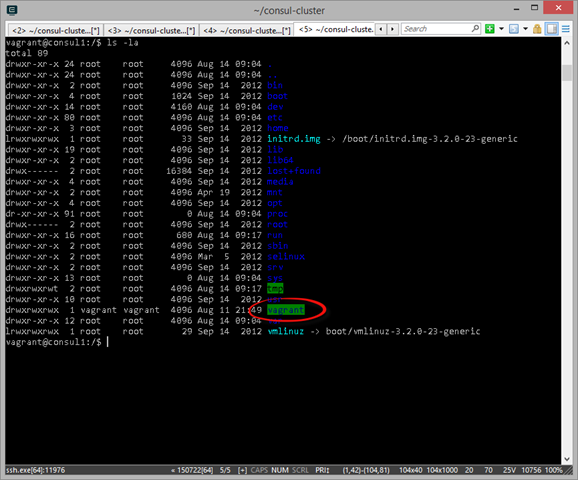

I know we haven’t actually provisioned anything yet but here’s an example from a machine I have provisioned with Vagrant. If I SSH onto the box and look at the root of the file system I can see the /vagrant directory.

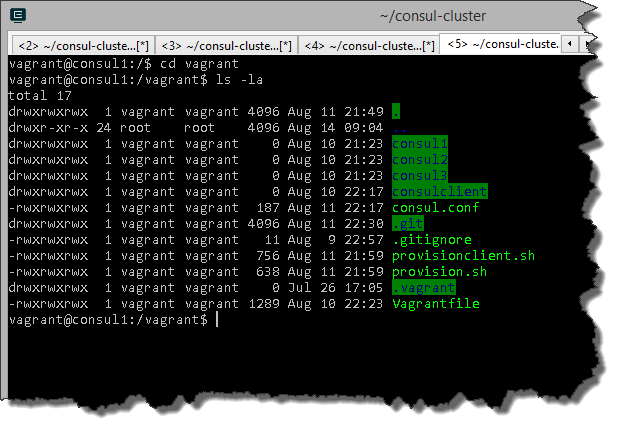

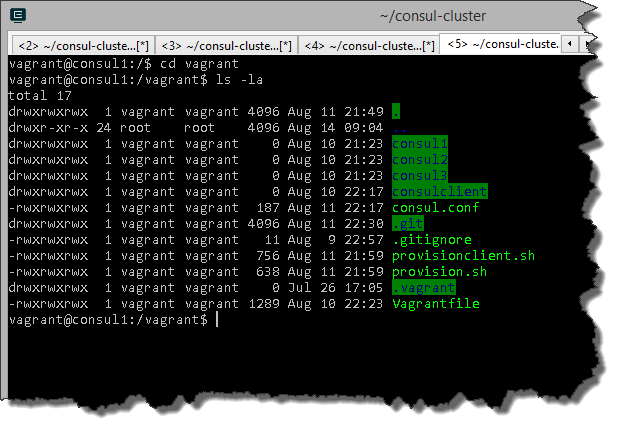

Listing the contents of the /vagrant directory reveals all the files and folders in my working directory on the host machine including the Vagrantfile and provision.sh.

You’ll also notice that in step 5 of provision.sh we are using an argument passed into the script ($1). This argument is used to pass in the path to the appropriate Consul configuration file for the instance because there is a different configuration file for each Consul agent. You can see this argument being set in the Shell provisioner in the Vagrantfile. For example:

s.args = ["/vagrant/consul1/config.json"]
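Because the script depends entirely on that one argument, a small guard can make a missing or mistyped path fail loudly. This is a minimal sketch, not part of the original provision.sh: the function name require_config and its return codes are assumptions made for illustration.

```shell
#!/bin/sh
# require_config PATH
# Hypothetical guard: returns 0 if PATH names an existing file,
# 2 if no path was given, and 1 if the file does not exist.
require_config() {
  if [ -z "$1" ]; then
    echo "usage: provision.sh /vagrant/<machine>/config.json" >&2
    return 2
  fi
  if [ ! -f "$1" ]; then
    echo "config file not found: $1" >&2
    return 1
  fi
  return 0
}

# In provision.sh this might be called just before step 5:
#   require_config "$1" || exit 1
```

With a guard like this, a typo in s.args would show up as a clear error during provisioning rather than as a Consul agent that fails to start.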

The upstart script

The upstart script looks like this:

description "Consul process"

start on (local-filesystems and net-device-up IFACE=eth0)

stop on runlevel [!12345]

respawn

exec consul agent -config-file=/etc/consul.d/config.json

This script is copied to /etc/init on the virtual machine in step 2 of provision.sh. This simply makes sure that the agent is restarted when the box is restarted.

Why is this necessary? Well, you won’t always run provisioning when you start a box. Sometimes it’s simply restarted and provision.sh doesn’t get run. Under these circumstances you want to make sure Consul is fired up.
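The flip side is that provisioning can also run more than once against the same box (for example with vagrant provision), in which case the download in step 3 is repeated. One possible way to avoid that is sketched below. The helper consul_installed is hypothetical; the original provision.sh always downloads.

```shell
#!/bin/sh
# consul_installed DIR
# Hypothetical helper: succeeds if DIR already holds an executable
# file named 'consul'.
consul_installed() {
  [ -x "$1/consul" ]
}

# Possible use around step 3 of provision.sh (kept as a comment so this
# sketch has no side effects when run on its own):
#   if ! consul_installed /usr/local/bin; then
#     cd /usr/local/bin
#     wget https://dl.bintray.com/mitchellh/consul/0.5.2_linux_amd64.zip
#     unzip *.zip
#     rm *.zip
#   fi
```

This only checks that a binary is present. It does not check which version is installed, so it is a convenience for a test cluster rather than a real upgrade mechanism.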

Consul configuration

Consul configuration can be handled by passing in arguments to the Consul agent on the command line. An alternative is to use a separate configuration file, which is what I’ve done. Each instance has a slightly different configuration but here’s the configuration for the first server, the consul1 instance:

{

"bootstrap": true,

"server": true,

"datacenter": "dc1",

"data_dir": "/var/consul",

"encrypt": "Dt3P9SpKGAR/DIUN1cDirg==",

"log_level": "INFO",

"enable_syslog": true,

"bind_addr": "172.20.20.10",

"client_addr": "172.20.20.10"

}

In each Consul cluster you need one of the servers to start up in bootstrap mode. You’ll note that this server has the bootstrap configuration option set to true. It’s the only server in the cluster to specify this. In Consul terms this is manual bootstrapping where we are specifying which server will be the leader. With later versions of Consul it is possible to use automatic bootstrapping.
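Since exactly one server should carry the bootstrap flag, a quick check over the configuration files can catch a copy-and-paste slip. The sketch below assumes the working-folder layout implied by the Vagrantfile (one sub-folder per machine, each containing a config.json); the function name count_bootstrap_servers is hypothetical.

```shell
#!/bin/sh
# count_bootstrap_servers DIR
# Prints how many DIR/<machine>/config.json files set "bootstrap": true.
count_bootstrap_servers() {
  count=0
  for f in "$1"/*/config.json; do
    # Skip the unexpanded pattern when no files match.
    [ -f "$f" ] || continue
    if grep -Eq '"bootstrap"[[:space:]]*:[[:space:]]*true' "$f"; then
      count=$((count + 1))
    fi
  done
  echo "$count"
}

# Example, run from the working folder on the host:
#   count_bootstrap_servers .
```

For the cluster described here the expected answer is 1. Anything else suggests one of the configuration files needs another look.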

The configuration for the other servers looks something like this:

{

"bootstrap": false,

"server": true,

"datacenter": "dc1",

"data_dir": "/var/consul",

"encrypt": "Dt3P9SpKGAR/DIUN1cDirg==",

"log_level": "INFO",

"enable_syslog": true,

"bind_addr": "172.20.20.20",

"client_addr": "172.20.20.20",

"start_join": ["172.20.20.10", "172.20.20.30"]

}

Note that the bootstrap configuration option is now set to false. We also tell the Consul agent which servers to join to form the cluster by specifying the IP addresses of the other 2 servers (start_join).
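The start_join value follows a simple rule: list every server address except the agent’s own. As an illustration only, that rule can be written as a small shell function. The names SERVER_IPS and start_join_for are hypothetical; the addresses are the ones from the Vagrantfile.

```shell
#!/bin/sh
# The three server addresses defined in the Vagrantfile.
SERVER_IPS="172.20.20.10 172.20.20.20 172.20.20.30"

# start_join_for IP
# Prints a JSON array of every server address except IP.
start_join_for() {
  out=""
  for ip in $SERVER_IPS; do
    [ "$ip" = "$1" ] && continue
    if [ -n "$out" ]; then
      out="$out, "
    fi
    out="$out\"$ip\""
  done
  printf '[%s]\n' "$out"
}

start_join_for 172.20.20.20
```

For consul2 (172.20.20.20) this prints the same list as the configuration above. Passing an address that is not a server, such as the client’s 172.20.20.40, yields all three servers.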

The Consul agent running in client mode

To provision the machine hosting the Consul agent running in client mode we use a slightly different provisioning script (provisionclient.sh). This script simply adds a new step to download the Consul Web UI and extract it in the /usr/local/bin directory of the provisioned machine.

#!/bin/bash

# Step 1 - Get the necessary utilities and install them.

apt-get update

apt-get install -y unzip

# Step 2 - Copy the upstart script to the /etc/init folder.

cp /vagrant/consul.conf /etc/init/consul.conf

# Step 3 - Get the Consul Zip file and extract it.

cd /usr/local/bin

wget https://dl.bintray.com/mitchellh/consul/0.5.2_linux_amd64.zip

unzip *.zip

rm *.zip

# Step 4 - Get the Consul UI

wget https://dl.bintray.com/mitchellh/consul/0.5.2_web_ui.zip

unzip *.zip

rm *.zip

# Step 5 - Make the Consul directory.

mkdir -p /etc/consul.d

mkdir -p /var/consul

# Step 6 - Copy the client configuration.

cp "$1" /etc/consul.d/config.json

# Step 7 - Start Consul

exec consul agent -config-file=/etc/consul.d/config.json

We also use a slightly different Consul configuration file:

{

"bootstrap": false,

"server": false,

"datacenter": "dc1",

"data_dir": "/var/consul",

"ui_dir": "/usr/local/bin/dist",

"encrypt": "Dt3P9SpKGAR/DIUN1cDirg==",

"log_level": "INFO",

"enable_syslog": true,

"bind_addr": "172.20.20.40",

"client_addr": "172.20.20.40",

"start_join": ["172.20.20.10", "172.20.20.20", "172.20.20.30"]

}

In this configuration file we add the directory where the Consul Web UI has been extracted on the virtual machine (ui_dir).
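Once the client agent is running, the Web UI and the HTTP API should be reachable on the client’s address. The sketch below just builds the relevant URLs. It assumes the agent listens on Consul’s default HTTP port (8500), since the configuration above does not override it; the function names are hypothetical.

```shell
#!/bin/sh
# Address from client_addr in the client configuration.
CLIENT_ADDR="172.20.20.40"
# Assumption: Consul's default HTTP port, not set explicitly in this post.
HTTP_PORT=8500

# URL of the Web UI served by the client agent.
ui_url() {
  printf 'http://%s:%s/ui/\n' "$CLIENT_ADDR" "$HTTP_PORT"
}

# URL of the HTTP API endpoint that lists the nodes known to the catalog.
nodes_url() {
  printf 'http://%s:%s/v1/catalog/nodes\n' "$CLIENT_ADDR" "$HTTP_PORT"
}

ui_url
nodes_url

# With the cluster up, the second URL could be queried from the host:
#   curl "$(nodes_url)"
```

If all four agents have joined, the catalog query should list consul1, consul2, consul3 and consulclient.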

Wrapping up

In the following blog post (Setting up a Consul cluster for testing and development with Vagrant (Part 2)) we’ll look at how you actually use the Vagrantfile and scripts here to provision the server cluster.

You can get the files referred to in this blog post from Bitbucket.

References

[1] Introduction to Consul

[2] Vagrantfile